Need to handle large file uploads in your web app? Here's our experience using the tus protocol to support resumable uploads with a React frontend and a Spring Boot backend — and how we tackled network issues during testing.

For a recent project, we implemented support for users to upload large files (approximately 20 GB) to the system via a Web application. Given the expected deployment and usage of the system, we assumed that users might have a relatively slow or unreliable network connection. Therefore, such files could take many minutes or even hours to upload. We wanted to ensure the user wouldn't lose their progress in case of network disruptions during the process.

To implement support for resumable uploads, we adopted the tus protocol, which is quickly becoming the de facto standard for this. We believe this is a good opportunity to share our experience with it, including some tips for those who need to work with it and test it.

The tus protocol has been designed as a simple specification to implement resumable file uploads: that is, an upload process in which the client can resume an interrupted upload later (e.g., if the client lost network access at some point during the upload) without losing their progress. While a variety of other solutions exist (e.g., Resumable.js), tus is widely adopted: for example, by Cloudflare, Supabase, and Vimeo. It was introduced by the Transloadit team, and its specification and reference implementations are open-source under the MIT license. The IETF is also standardizing part of the protocol in the Internet draft "Resumable Uploads for HTTP".

The current tus 1.0 protocol consists of a very small core plus a number of extensions.

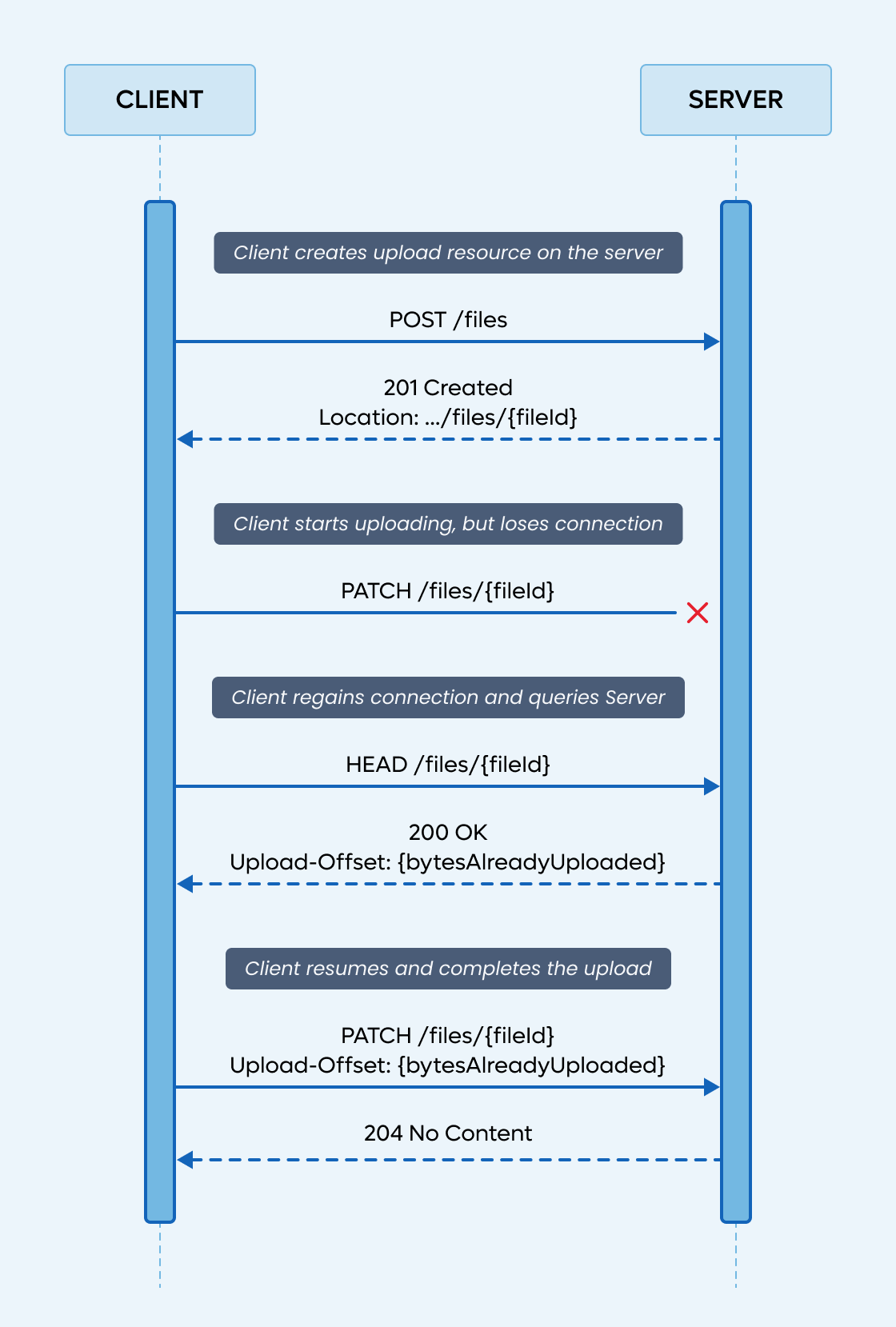

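The core consists of two main operations to work with resources that represent uploads in progress:

- a HEAD request retrieves the current offset of an upload, that is, how many bytes the server has received and stored so far;
- a PATCH request uploads data to the resource, starting from a given offset.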

The core protocol doesn't specify how these resources are created on the server, as that may vary across different implementations. The Creation extension, if implemented, lets clients create new upload resources with a POST request.

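Other optional protocol extensions include:

- Expiration, which lets the server tell clients when an unfinished upload will expire;
- Checksum, which lets the client send a checksum so the server can verify the integrity of the uploaded data;
- Termination, which lets the client delete an upload with a DELETE request;
- Concatenation, which lets the client combine several uploads into one (e.g., to upload parts in parallel).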

Note that tus does not require files to be uploaded in chunks of a fixed size. Indeed, the client can attempt to upload the entire file in a single PATCH request (or in the initial POST request using the Creation with Upload extension). If the request fails at any point, the client can later send a HEAD request to retrieve the offset up to which the data was received and stored by the server. This assumes, of course, that the server begins writing the data as it is received, rather than waiting for the entire payload. The client then restarts the upload from that offset with a PATCH request. This chunkless approach is recommended by the tus-js-client implementation as the option with the best upload performance (since using fixed chunks involves more requests and therefore more overhead, though probably negligible for chunks of tens of MBs).
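The chunkless flow described above can be sketched with a toy in-memory model. This is not code from tus-java-server or tus-js-client: `InMemoryUpload`, `ResumableClient`, and the `failAfter` parameter are hypothetical names introduced for illustration, and the "network failure" is simulated rather than real. The sketch only shows the logic: send everything in one PATCH, and on failure ask for the offset with HEAD and resume from there.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.util.Arrays;

/** Toy stand-in for one upload resource on a tus server (hypothetical, for illustration). */
final class InMemoryUpload {
    private final ByteArrayOutputStream stored = new ByteArrayOutputStream();

    /** Models HEAD: returns the offset, i.e. how many bytes have been stored so far. */
    int head() {
        return stored.size();
    }

    /**
     * Models PATCH: appends data at the given offset, storing bytes as they arrive.
     * failAfter simulates the connection dropping after that many bytes (negative: never).
     */
    void patch(int offset, byte[] data, int failAfter) throws IOException {
        if (offset != stored.size()) {
            // A real tus server rejects a PATCH whose offset does not match
            throw new IllegalStateException("offset mismatch");
        }
        for (int i = 0; i < data.length; i++) {
            if (i == failAfter) {
                throw new IOException("simulated network failure");
            }
            stored.write(data[i]);
        }
    }

    byte[] content() {
        return stored.toByteArray();
    }
}

final class ResumableClient {
    /** Uploads the file without fixed-size chunks; returns the number of PATCH requests sent. */
    static int upload(InMemoryUpload server, byte[] file, int failFirstAttemptAfter) {
        int offset = 0; // a new upload starts at offset 0
        int failAfter = failFirstAttemptAfter;
        int requests = 0;
        while (offset < file.length) {
            byte[] rest = Arrays.copyOfRange(file, offset, file.length);
            requests++;
            try {
                server.patch(offset, rest, failAfter);
                offset += rest.length; // everything was accepted
            } catch (IOException e) {
                failAfter = -1;         // in this sketch only the first attempt fails
                offset = server.head(); // ask the server where to resume from
            }
        }
        return requests;
    }
}
```

With a 10-byte file and a failure after 4 bytes, the client sends two PATCH requests: the first stores 4 bytes before failing, and the second resumes at offset 4 and sends the remaining 6.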

For a full description of the protocol, check out the official specification.

The tus website lists several implementations of the server and client sides of the protocol, both official implementations by the protocol developers and third-party ones.

In our case, we were developing a Web application with a TypeScript/React/Next.js frontend and a backend using Java and Spring Boot. We used the official tus-js-client in the frontend (client-side in the browser, not on the server-side of Next.js) and the third-party tus-java-server in the backend.

We found both libraries to be well documented, easy to use, and reliable in our use case.

We were initially unsure about tus-java-server, as it's a third-party library with no recent activity and doesn't appear to be widely used. However, we didn't have any issues with it. The library is also simple and well-structured enough that it should be easy to fork and edit if needed. As an alternative for the backend, we had considered tusd, the official reference implementation in Go, which can be deployed as a standalone service. However, making it work with our backend to integrate file upload (which would be handled by tusd) with validation and metadata storage (handled by our backend), while probably doable using hooks, would have complicated the architecture and upload flow.

The tus file upload service is initialized as follows:

```java
new TusFileUploadService()
    .withUploadUri(/* base URI for file upload, e.g., "/files" */)
    .withStoragePath(/* path to store uploads and metadata */)
    .withUploadExpirationPeriod(/* milliseconds after which an unfinished upload expires */);
```

This uses the default storage, which saves uploads (including unfinished ones) and metadata in the file system.

This is the gist of handling a tus request:

```java
@RequestMapping(
    value = {/* base upload URI */, /* base upload URI */ + "/**"},
    method = {RequestMethod.POST, RequestMethod.PATCH, RequestMethod.HEAD, RequestMethod.DELETE}
)
public void upload(
    jakarta.servlet.http.HttpServletRequest servletRequest,
    jakarta.servlet.http.HttpServletResponse servletResponse
) {
    String requestURI = servletRequest.getRequestURI();
    tusFileUploadService.process(servletRequest, servletResponse);

    // After processing the request, check if the upload is completed
    UploadInfo uploadInfo = tusFileUploadService.getUploadInfo(requestURI);
    if (uploadInfo == null || uploadInfo.isUploadInProgress()) {
        return;
    }

    // If it is completed, do whatever you need to do with it
    try {
        processCompletedUpload(uploadInfo, requestURI);
    } finally {
        // Remove the data for the upload once it is completed
        tusFileUploadService.deleteUpload(requestURI);
    }
}
```

If the client never completes an upload, tus-java-server will consider it expired after the configurable expiration period. You should call `tusFileUploadService.cleanup()` in a periodic maintenance task to delete expired data and keep storage usage under control.
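As a rough sketch of such a maintenance task, the class below runs a cleanup action at a fixed delay using only the JDK scheduler. `CleanupTask` and `ExpiredUploadCleaner` are hypothetical names introduced here so that the example does not depend on the tus library; in a real application the task would be something like `() -> tusFileUploadService.cleanup()`. In a Spring Boot application, a method annotated with `@Scheduled` would be a natural alternative.

```java
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;

/** Hypothetical wrapper for the cleanup call, e.g. () -> tusFileUploadService.cleanup(). */
@FunctionalInterface
interface CleanupTask {
    void run() throws Exception;
}

/** Runs a cleanup task periodically on a single background thread. */
final class ExpiredUploadCleaner implements AutoCloseable {
    private final ScheduledExecutorService scheduler =
            Executors.newSingleThreadScheduledExecutor();

    /** Waits periodMillis before the first run and between the end of one run and the next. */
    ExpiredUploadCleaner(CleanupTask task, long periodMillis) {
        scheduler.scheduleWithFixedDelay(() -> {
            try {
                task.run();
            } catch (Exception e) {
                // Catch and report: an exception escaping here would cancel all future runs
                System.err.println("Upload cleanup failed: " + e.getMessage());
            }
        }, periodMillis, periodMillis, TimeUnit.MILLISECONDS);
    }

    /** Stops the background thread, e.g. on application shutdown. */
    @Override
    public void close() {
        scheduler.shutdownNow();
    }
}
```

The period is a judgment call: it only needs to be short enough that expired uploads do not pile up between runs.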

The tus-js-client documentation provides a good example of how to start and resume an upload. The library also includes support for automatic retries after a delay in the event of a network error.

Note that, by default, the client stores the started uploads in local storage, including a fingerprint of the file; this storage can be queried when restarting an upload. This allows users to resume uploads even if they have closed the tab or restarted the browser, but it requires a suitable UX to be understandable. In the simpler case, where resuming is only supported if the user still has the tab open (e.g., showing the upload progress bar, the network error message, and a prompt to resume), this is not necessary, and the storage can be disabled by setting storeFingerprintForResuming to false.

We encountered a few additional difficulties when testing that resuming uploads works correctly, both manually and in automated tests using Playwright. Here are a couple of tips about this.

To test it, we needed to simulate the browser going offline while uploading. Even for manual testing, simulating this is clearly less cumbersome than actually putting the browser offline (e.g., disabling Wi-Fi).

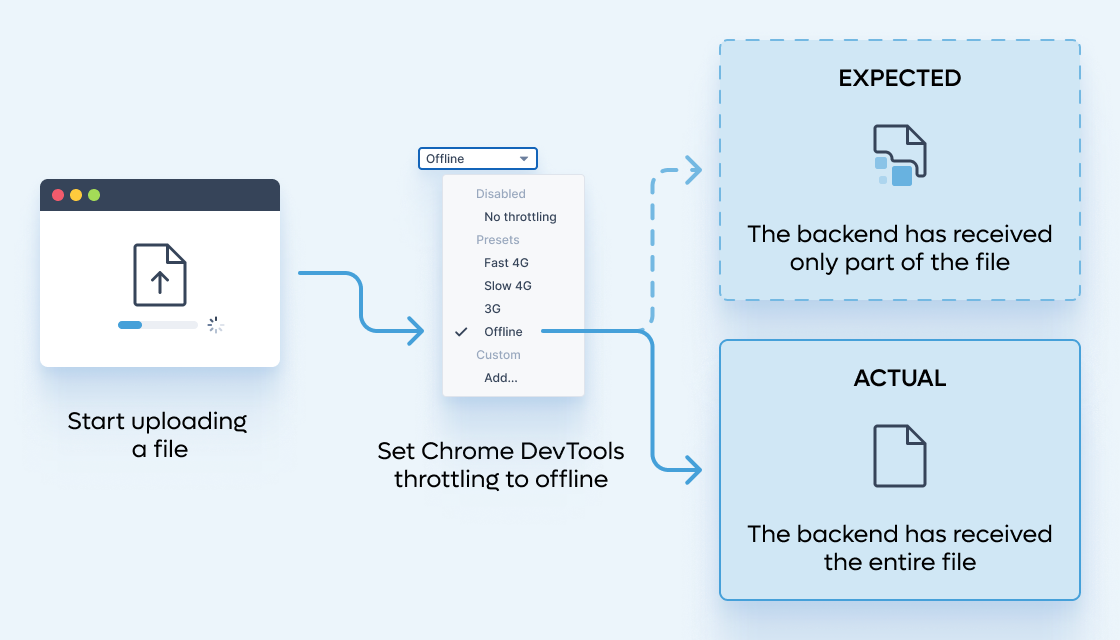

The easiest option would be to set the browser to offline mode, e.g., via the Throttling features of Google Chrome DevTools (Chrome was the primary browser we needed to support). This can also be done in Playwright and uses the same feature under the hood.

However, it turns out this doesn't work as intended. We attempted to simulate a long-running upload by setting the throttling to a low speed (such as 3G), then to "Offline" to simulate disconnection. It appears that when we switch throttling to Offline, Chrome completes the pending request (without throttling it) before going offline. We didn't find documentation on this, but we observed this behaviour consistently.

A solution is to simulate disconnection not through the browser, but by adding a proxy that can be easily switched off. For instance (assuming the frontend and backend are served locally on port 3000), run `socat tcp-listen:3001,fork,reuseaddr tcp:localhost:3000` to start a process that listens on port 3001 and proxies to port 3000. You open the web application on port 3001 and start an upload, then kill the socat process. The browser won't be able to reach the tus server anymore (since it's trying to reach it on port 3001, which is no longer proxied), so the tus client should report an error (and then presumably try to resume automatically or prompt the user to resume).

If your application already includes a reverse proxy (e.g., to check authentication), you can disable that instead of using a separate proxy with socat.
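If a test harness runs on the JVM, the same switch-off idea can be sketched as a tiny TCP forwarder. This is only an illustration of the technique, not something we used: `SwitchableProxy` is a hypothetical class, it forwards raw bytes without understanding HTTP, and it drops the whole connection as soon as either side closes its end.

```java
import java.io.IOException;
import java.net.ServerSocket;
import java.net.Socket;
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;

/** Minimal TCP forwarder that can be switched off, similar in spirit to the socat command. */
final class SwitchableProxy implements AutoCloseable {
    private final ServerSocket listener;
    private final Set<Socket> open = ConcurrentHashMap.newKeySet();

    /** Listens on listenPort (0 picks a free port) and forwards to targetHost:targetPort. */
    SwitchableProxy(int listenPort, String targetHost, int targetPort) throws IOException {
        listener = new ServerSocket(listenPort);
        Thread acceptor = new Thread(() -> {
            while (true) {
                Socket client;
                try {
                    client = listener.accept();
                } catch (IOException e) {
                    return; // the listener was closed: stop accepting
                }
                try {
                    Socket target = new Socket(targetHost, targetPort);
                    open.add(client);
                    open.add(target);
                    pipe(client, target);
                    pipe(target, client);
                } catch (IOException e) {
                    closeQuietly(client); // the backend is unreachable
                }
            }
        });
        acceptor.setDaemon(true);
        acceptor.start();
    }

    int port() {
        return listener.getLocalPort();
    }

    /** Copies bytes in one direction on a background thread until either side goes away. */
    private void pipe(Socket from, Socket to) {
        Thread copier = new Thread(() -> {
            try {
                from.getInputStream().transferTo(to.getOutputStream());
            } catch (IOException e) {
                // one side was closed; fall through to the cleanup below
            } finally {
                closeQuietly(from);
                closeQuietly(to);
            }
        });
        copier.setDaemon(true);
        copier.start();
    }

    private void closeQuietly(Socket socket) {
        open.remove(socket);
        try {
            socket.close();
        } catch (IOException ignored) {
            // nothing useful to do if closing fails
        }
    }

    /** Switches the proxy off: stops listening and drops every connection in flight. */
    @Override
    public void close() throws IOException {
        listener.close();
        for (Socket socket : open) {
            closeQuietly(socket);
        }
    }
}
```

A test would create the proxy in front of the application, point the browser at `port()`, start an upload, and call `close()` to simulate the disconnection; creating a new proxy on the same port then plays the role of the network coming back.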

One limitation with this approach is that the server seems to react instantly to the lost connection. If the client actually goes offline, the server won't notice this immediately; instead, it will stop receiving new packets.

This makes a difference for tus-java-server, which creates lock files for ongoing uploads (to prevent parallel uploads to the same resource) and deletes them when the connection is closed. When the proxy is killed, the server deletes the lock file instantly; when the client really goes offline, it deletes the lock file only after the Tomcat server closes the connection following a timeout.

This timeout is controlled by `server.tomcat.connection-timeout`, which, among other things, sets the timeout between the reception of TCP packets in a POST request. The default timeout of 60 seconds was too long for our needs, as we wanted the client to attempt to resume automatically within less than a minute.

When testing this file locking behaviour, therefore, proxying with socat is not equivalent to really disconnecting the client.
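The difference between the two cases can be reproduced with plain sockets. The sketch below is not how Tomcat is implemented: `DisconnectDemo` and `serveOnce` are hypothetical names, and the socket read timeout set with `setSoTimeout` only plays a role roughly analogous to the connection timeout discussed above. It shows that a server sees a closed connection right away, but notices a silent peer only once the read timeout expires.

```java
import java.io.IOException;
import java.io.InputStream;
import java.net.ServerSocket;
import java.net.Socket;
import java.net.SocketTimeoutException;

final class DisconnectDemo {
    /** How the server side found out that the upload stopped. */
    enum Outcome { PEER_CLOSED, TIMED_OUT }

    /**
     * Accepts one connection and reads from it until the data stops.
     * readTimeoutMillis is the longest the server waits for more bytes.
     */
    static Outcome serveOnce(ServerSocket server, int readTimeoutMillis) throws IOException {
        try (Socket connection = server.accept()) {
            connection.setSoTimeout(readTimeoutMillis);
            InputStream in = connection.getInputStream();
            byte[] buffer = new byte[8192];
            try {
                while (in.read(buffer) != -1) {
                    // keep consuming upload data
                }
                // Proxy killed: the end of the stream is visible immediately
                return Outcome.PEER_CLOSED;
            } catch (SocketTimeoutException e) {
                // Client silently offline: only the timeout reveals it
                return Outcome.TIMED_OUT;
            } catch (IOException e) {
                // A connection reset also surfaces without waiting for the timeout
                return Outcome.PEER_CLOSED;
            }
        }
    }
}
```

A client that writes some bytes and closes its socket yields `PEER_CLOSED` almost at once, while a client that writes and then stays connected without sending anything yields `TIMED_OUT` only after `readTimeoutMillis` has elapsed.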

The tus protocol and the libraries we selected were a great fit for implementing resumable uploads in our application, and they would likely be suitable for other applications with a similar tech stack that require support for uploading large files. While the protocol itself is simple, we hope these tips on development and testing can help you if you’re implementing this feature.

Tommaso joined Buildo as a full-stack engineer in 2019. He loves functional programming languages (and did a PhD on them) but at Buildo he has broadened his interest to all backend development and software architecture and is now the backend Tech Lead.
