From apps that understand you to interfaces that know you: discover how Sentient Design turns technology into a proactive, discreet partner. Are we ready to trust almost-human software?

The first time I struggled to get an airline’s clunky app to understand a simple task, I remember thinking, “Why can’t this thing just get what I want to do?” That question led me down a rabbit hole, from learning about Intent-Based UI to eventually discovering Sentient Design through Josh Clark’s talk on Sentient Computing. It felt like the next logical step. Interfaces weren’t just reacting anymore; they were evolving, almost as if they were learning who we are.

If Intent-Based UI is about designing interfaces that understand what users want, Sentient Design is about interfaces that understand who users are. It’s the difference between a helpful waiter who remembers your usual order and a personal chef who intuitively crafts meals based on your health goals, mood, and schedule. One responds efficiently; the other proactively curates an experience tailored to you.

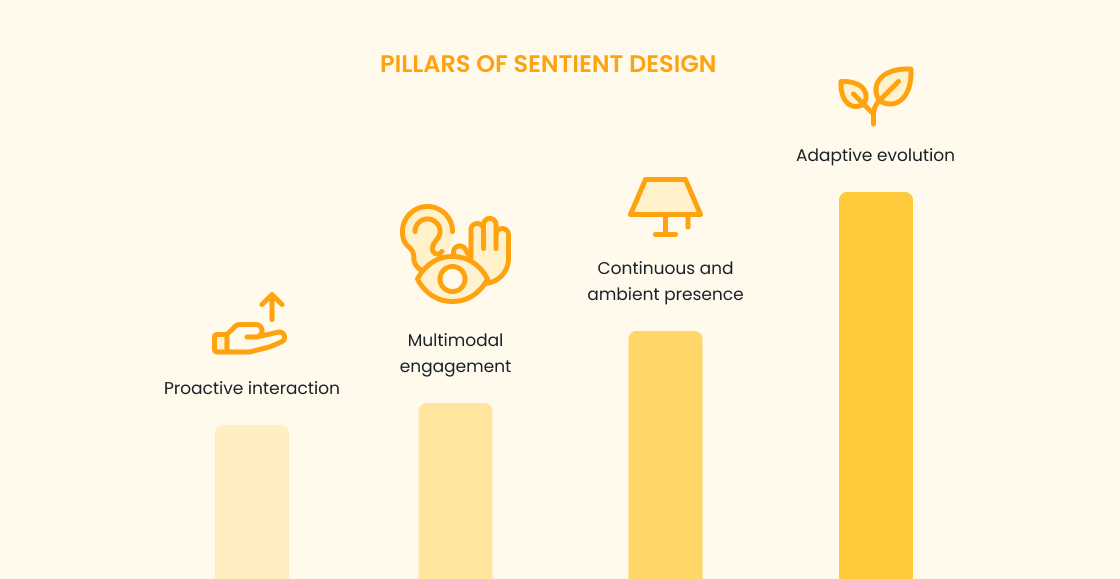

Looking back, user interfaces have always been reactive—waiting for input, then executing commands. Sentient Design shifts this entirely. Instead of treating technology as a passive tool, it embraces the idea of technology as an active participant. It's about crafting experiences that are:

We’re no longer just designing for usability; we’re designing for partnerships between humans and technology.

Based on Josh Clark’s talk, Sentient Design can be identified by five key characteristics:

When I started paying closer attention to real-world examples of Sentient Design, I realized it’s already woven into my everyday life — often in ways so subtle I barely notice. One place it really stands out is in context-aware computing. Wearables, for instance, have evolved far beyond basic step counters. They’re now interpreting what’s happening in our bodies and nudging us toward healthier habits. I remember one moment vividly: I was walking into a high-stakes meeting I’d been stressing about all week, and right then, my smartwatch buzzed and suggested a breathing exercise. At first, I rolled my eyes. But I followed the prompt anyway. And weirdly enough — it helped. Not in a dramatic, life-changing way, but just enough to ground me. It reminded me to breathe, when I probably wouldn’t have otherwise. That quiet, well-timed nudge captured what Sentient Design is really about for me: not flashy features, but small, intuitive moments that actually make a difference.

I’ve also noticed how workplace productivity is starting to feel the impact of Sentient Design in really practical ways. One example that stood out to me recently was during a long meeting, one of those where ideas are flying and action items pile up fast. Normally, I’d be scrambling to take notes while still trying to stay present in the conversation. But the AI meeting assistant jumped in, summarizing key points and even suggesting follow-ups. I didn’t have to ask; it just knew what would be helpful. Instead of acting like a passive recorder, it felt like a quiet collaborator, making sure nothing fell through the cracks. And it’s not just in meetings. These context-aware systems are everywhere now. Take my parents’ smart thermostat, which started adjusting the temperature before we even realised we were too warm: it picked up on our routine and adapted without us lifting a finger. These kinds of systems aren’t just reacting anymore; they’re anticipating, smoothing out the rough edges of daily life in ways that feel subtle but significant.

As excited as I am about everything Sentient Design can do, I can’t ignore the part that still makes me uneasy: privacy. These systems need a lot of personal data to actually be helpful—everything from habits and preferences to location and even emotional cues. And while that kind of insight can make experiences feel beautifully tailored, it also raises some tough questions.

I’ve caught myself pausing before granting yet another app access to my data, wondering, Do I really know what it’s doing with this? That’s why transparency isn’t optional—it’s essential. Designers need to be up front: what’s being collected, why it matters, and most importantly, what users get in return. When it’s done right, and data is treated with care, the result can be a deeper, more personal connection with technology—one that feels like a genuine enhancement rather than an intrusion. But that trust has to be earned, not assumed.

Another thing that’s been on my mind is user autonomy. As tech gets smarter, it starts making decisions for us — and not always with our input. There’s a fine line between being helpful and being intrusive. Recently, there’s been a lot of buzz about AI agents that can shop online on your behalf. It sounds handy—like automatically ordering toilet paper before you run out, or refilling your meds because it knows your schedule. But that doesn’t mean we’re all ready to hand over that kind of control.

Yes, it can save time. But what happens when a system makes a decision you didn’t ask for? It starts to feel like you’re not the one in charge anymore, and convenience begins to erode human decision-making. Specific use cases may justify automation, but granting open-ended autonomy to machines risks undermining the very human-centered values that good design should protect. Designers must tread carefully, ensuring that sentient systems support, not substitute, human judgment.

Another issue is emotional manipulation. Sentient systems are getting really good at reading us: our tone of voice, facial expressions, even how we type. On paper, that sounds amazing. A device that knows when you’re stressed and suggests a break? Helpful. But it gets murky fast. What if that same emotional insight is used to nudge you toward buying something you don’t really need, just because you’re feeling low? Or worse, what if it’s shaping what you see online to keep you emotionally hooked? We’ve already seen versions of this with algorithmic feeds on social media, and it’s easy to imagine how a more “sentient” version could go even deeper. That’s why it’s so important that emotional intelligence in design comes with clear boundaries. Empathy from machines should feel supportive, not manipulative.

Then there’s the challenge of overdependence, and I’ll be honest — it’s one I catch myself falling into. When technology is this good at anticipating your needs, it’s tempting to just let it handle everything. But that ease comes at a cost. I’ve noticed I barely remember phone numbers anymore, or even how to get around my own city without GPS. Now imagine that on a larger scale: a world where people no longer question recommendations from a system because “it knows me better than I do.” Whether it's choosing what to eat, when to rest, or even who to date, the line between assistance and autopilot starts to blur. Sentient systems should make our lives easier, not numb us to our own choices. Otherwise, we risk designing convenience into complacency.

The more I explore Sentient Design — or whatever name you prefer: empathetic interfaces, adaptive experiences, even context-aware design — the more I feel like we’re standing at the edge of something big. We’re not just improving interfaces anymore — we’re rethinking our entire relationship with technology. And it’s not about faster or flashier; it’s about something deeper: empathy.

I keep thinking about how the best experiences with tech are the ones that just fit — the ones that feel like they know you, but don’t crowd you. Systems that are smart enough to help, yet humble enough to step back.

But getting there means asking the hard questions. How do we build systems that understand us without overstepping? How do we make people feel supported, not surveilled? That’s the balance we have to strike — and honestly, it’s not a one-size-fits-all answer.

Maybe what I’m really hoping for isn’t just better tech — but more human tech. Thoughtful. Respectful. A little more like us. And if we get this right? I think the future of design won’t just be smarter — it’ll feel more like a conversation worth having.

Livia is a designer at Buildo, with competence in UI, UX, and design systems. Her Brazilian background adds a valuable layer of cultural diversity and perspective to the team. She is driven by her passion for research and collaborating with others to deliver top-quality projects.
